Technical Description Use Case VFU-ELF

| (40 dazwischenliegende Versionen von 2 Benutzern werden nicht angezeigt) | |||

| Zeile 1: | Zeile 1: | ||

| − | + | Semantic CorA was developed in close connection to the use case of analyzing educational lexica in research on the history of education. In this context, two research projects are realised in the vre. | |

| + | [[File:entities_CorA.png|right|thumb|250px|Entities for Lexica]] | ||

| + | =Research Data= | ||

| + | [[File:Extension_OfflineImportLexicon.PNG|right|thumb|250px|OfflineImportLexicon]] | ||

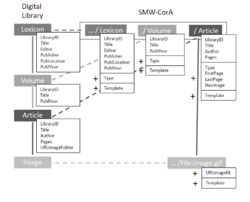

| + | In both research projects the examined data are educational lexica. A lexicon can be subdivided four more entities: a lexicon otself, a volume, an article (lemma) and the image file. Each entity has its own properties (see image "Entities for Lexica"). On this basis, we created a data model which made it possible to describe the given data. | ||

| − | |||

| − | |||

==Import and Handling== | ==Import and Handling== | ||

| − | We were able to import bibliographic metadata (describing the data down to the article level) and the | + | We were able to import bibliographic metadata (describing the data down to the article level) and the digitized objects from [http://bbf.dipf.de/digitale-bbf/scripta-paedagogica-online/digitalisierte-nachschlagewerke Scripta Paedagogica Online (SPO)] which was accessible via an OAI-Interface; in an automated process, nearly 22,000 articles from different lexica were imported that were described with the data model and were ready for analysis. To add more articles manually, we developed the extension [[Semantic_CorA_Extensions#OfflineImportLexicon OfflineImportLexicon]] which provided a standardized import routine for the researchers. |

| − | + | ||

| + | ==Data Templates of Lexica== | ||

| + | The usage of templates offers a centralised data management. Using these feature, a baseline for the data import and display is provided. Following the described entities of a lexicon, each level of entity contains several data. | ||

| + | |||

| + | The [[Template:Cora_Lexicon_Data|Cora Lexicon Data]] template is called through the following format: | ||

| + | |||

| + | <pre> | ||

| + | {{Cora Lexicon Data | ||

| + | | Title = | ||

| + | | Subtitle = | ||

| + | | Place of Publication = | ||

| + | | Editor = | ||

| + | | Coordinator = | ||

| + | | Author = | ||

| + | | Publisher = | ||

| + | | Language = | ||

| + | | Edition = | ||

| + | | URN = | ||

| + | | Year of Publication = | ||

| + | | IDBBF = | ||

| + | | Has Volume = | ||

| + | }} | ||

| + | </pre> | ||

| + | |||

| + | The [[Template:Cora_Volume_Data|Cora Volume Data]] template is called through the following format: | ||

| + | |||

| + | <pre> | ||

| + | {{Cora Volume Data | ||

| + | | Title = | ||

| + | | Subtitle = | ||

| + | | Volume Number = | ||

| + | | Place of Publication = | ||

| + | | Publisher = | ||

| + | | Editor = | ||

| + | | Language = | ||

| + | | URN = | ||

| + | | Year of Publication = | ||

| + | | IDBBF = | ||

| + | | Physical Description = | ||

| + | | Part of Lexicon = | ||

| + | | Numbering Offset = | ||

| + | | Type of Numbering = | ||

| + | }} | ||

| + | </pre> | ||

| + | |||

| + | The [[Template:Cora_Lemmata_Data|Cora Lemmata Data]] template is called through the following format: | ||

| + | <pre> | ||

| + | {{Cora Lemmata Data | ||

| + | | Title = | ||

| + | | Subtitle = | ||

| + | | Part of Lexicon = | ||

| + | | Part of Volume = | ||

| + | | Language = | ||

| + | | URN = | ||

| + | | IDBBF = | ||

| + | | Original Person = | ||

| + | | First Page = | ||

| + | | Last Page = | ||

| + | | Has Digital Image = | ||

| + | | Category = | ||

| + | }} | ||

| + | </pre> | ||

| + | |||

| + | |||

| + | The [[Template:Cora_Image_Data|Cora Image Data]] template is called through the following format: | ||

| + | |||

| + | <pre> | ||

| + | {{Cora Image Data | ||

| + | | Original URI = | ||

| + | | Rights Holder = | ||

| + | | URN = | ||

| + | | Page Number = | ||

| + | | Page Numbering = | ||

| + | | Part of Article = | ||

| + | | Part of TitlePage = | ||

| + | | Part of TOC = | ||

| + | | Part of Preface = | ||

| + | }} | ||

| + | </pre> | ||

| + | |||

| + | == Mapping with Semantic Web Vocabularies == | ||

| + | SMW offers per default the [https://semantic-mediawiki.org/wiki/Help:Import_vocabulary import of Semantic Web Vocabularies]. To describe the four entities of the lexicon (lexicon, volume, lemma, image) and additionally further main entities like persons, corporate bodies and concepts, we used the following range of vocabularies: | ||

| + | * [[MediaWiki:Smw_import_bibo|BIBO]] | ||

| + | * [[MediaWiki:Smw_import_gnd|GND]] | ||

| + | * [[MediaWiki:Smw_import_rel|REL]] | ||

| + | * [[MediaWiki:Smw_import_skos|SKOS]] | ||

| + | * [[MediaWiki:Smw_import_foaf|FOAF]] | ||

| + | |||

| + | By clicking the link the imported and used properties of the ontology at the VFU-ELF are shown in detail. | ||

| + | |||

| + | == Mainpage == | ||

| + | |||

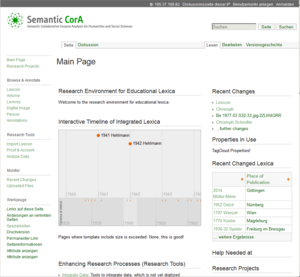

| + | At the mainpage central information of the currents projects and of the different research actions are offered (Recent changes, changed lexica, projects, help needed, timeline of lexica etc.). Hetre is a deafault mainpage which offers a two coloumn page [[Main_Page_Default]]. Please have as well a look at the design templates [[Templeate:Box1]] and [[Template:Box2]] and the adjusted [[Cora_Skin_implementation|skin]]. | ||

| − | + | [[File:VRE_ELF_Mainpage.PNG|right|thumb|300px|Mainpage of VRE ELF for Educational Lexica Research]] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

__NOTOC__ | __NOTOC__ | ||

| + | __NOEDITSECTION__ | ||

Current version as of 29 April 2014, 20:59

Semantic CorA was developed in close connection with the use case of analyzing educational lexica in research on the history of education. In this context, two research projects are carried out in the virtual research environment (VRE).

Research Data

In both research projects, the data under examination are educational lexica. A lexicon can be subdivided into four entities: the lexicon itself, the volume, the article (lemma), and the image file. Each entity has its own properties (see the figure "Entities for Lexica"). On this basis, we created a data model that describes the given data.

[Figure: Entities for Lexica]
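To make the four-level hierarchy concrete, here is a minimal Python sketch that models the entities as dataclasses. The chosen fields are a small subset of the template parameters listed further down. The class names, the integer page numbers and the `all_lemmata` helper are assumptions made for illustration. The VRE itself stores these entities as Semantic MediaWiki pages, not as Python objects.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Image:
    """A scanned page (cf. Cora Image Data); fields are illustrative."""
    urn: str
    page_number: Optional[int] = None


@dataclass
class Lemma:
    """An article within a volume (cf. Cora Lemmata Data)."""
    title: str
    first_page: int
    last_page: int
    images: List[Image] = field(default_factory=list)


@dataclass
class Volume:
    """A physical volume of a lexicon (cf. Cora Volume Data)."""
    title: str
    volume_number: str
    lemmata: List[Lemma] = field(default_factory=list)


@dataclass
class Lexicon:
    """The (multi-volume) work as a whole (cf. Cora Lexicon Data)."""
    title: str
    year_of_publication: Optional[int] = None
    volumes: List[Volume] = field(default_factory=list)

    def all_lemmata(self) -> List[Lemma]:
        """Collect the articles of every volume, in volume order."""
        return [lemma for volume in self.volumes for lemma in volume.lemmata]


# Usage with hypothetical sample data.
lexicon = Lexicon(title="Example Lexicon", volumes=[
    Volume(title="Vol. 1", volume_number="1",
           lemmata=[Lemma("Erziehung", 10, 12), Lemma("Schule", 13, 15)]),
    Volume(title="Vol. 2", volume_number="2",
           lemmata=[Lemma("Unterricht", 5, 9)]),
])
print([lemma.title for lemma in lexicon.all_lemmata()])
```

Nesting the objects follows the "part of" direction of the data model. The templates record the same links as explicit page references (for example Part of Volume).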

Import and Handling

We imported bibliographic metadata (describing the data down to the article level) and the digitized objects from Scripta Paedagogica Online (SPO, http://bbf.dipf.de/digitale-bbf/scripta-paedagogica-online/digitalisierte-nachschlagewerke), which was accessible via an OAI interface. In an automated process, nearly 22,000 articles from different lexica were imported, described with the data model, and made ready for analysis. To add further articles manually, we developed the extension OfflineImportLexicon (see Semantic_CorA_Extensions#OfflineImportLexicon), which provides researchers with a standardized import routine.

[Figure: OfflineImportLexicon]
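OAI-PMH harvesting returns large result sets in pages and uses a `resumptionToken` to request the next page. The sketch below assumes the SPO interface follows OAI-PMH 2.0. It builds `ListRecords` request URLs and extracts record identifiers and the token from a response, using only the standard library. `BASE_URL` is a placeholder because the actual SPO endpoint is not given here, and this is not the import routine that was actually used for the ~22,000 articles.

```python
import urllib.parse
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

OAI_NS = {"oai": "http://www.openarchives.org/OAI/2.0/"}
# Hypothetical placeholder; the real SPO OAI endpoint is not stated in this page.
BASE_URL = "https://example.org/oai"


def list_records_url(resumption_token: Optional[str] = None,
                     metadata_prefix: str = "oai_dc") -> str:
    """Build a ListRecords request; resumptionToken is an exclusive argument."""
    if resumption_token:
        params = {"verb": "ListRecords", "resumptionToken": resumption_token}
    else:
        params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    return BASE_URL + "?" + urllib.parse.urlencode(params)


def parse_list_records(xml_text: str) -> Tuple[List[str], Optional[str]]:
    """Return the record identifiers and the next resumptionToken (None if last page)."""
    root = ET.fromstring(xml_text)
    ids = [el.text for el in
           root.findall(".//oai:record/oai:header/oai:identifier", OAI_NS)]
    token_el = root.find(".//oai:resumptionToken", OAI_NS)
    token = token_el.text if token_el is not None and token_el.text else None
    return ids, token


# Usage with a hand-written sample response (not real SPO data).
sample = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListRecords>
    <record><header><identifier>oai:example:1</identifier></header></record>
    <record><header><identifier>oai:example:2</identifier></header></record>
    <resumptionToken>page2</resumptionToken>
  </ListRecords>
</OAI-PMH>"""
ids, token = parse_list_records(sample)
print(ids, token)
print(list_records_url(token))
```

A harvester would keep calling `list_records_url(token)` until `parse_list_records` returns `None` as the token. An empty `resumptionToken` element marks the last page of the result set.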

Data Templates of Lexica

Templates provide centralised data management and a common baseline for importing and displaying data. Following the entities of a lexicon described above, each entity level has its own template with its own set of data fields.

The Cora Lexicon Data template (Template:Cora_Lexicon_Data) is called in the following format:

    {{Cora Lexicon Data
    | Title =
    | Subtitle =
    | Place of Publication =
    | Editor =
    | Coordinator =
    | Author =
    | Publisher =
    | Language =
    | Edition =
    | URN =
    | Year of Publication =
    | IDBBF =
    | Has Volume =
    }}
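Every page of a given entity type calls the same template, so these calls can be generated instead of typed by hand. The following is a minimal sketch that assumes all values are plain strings. The hypothetical function `render_template_call` writes a call in the layout shown above, leaves missing fields empty, and rejects parameter names that the template does not define. The field list is copied from the Cora Lexicon Data call above. The sketch does not show how the OfflineImportLexicon extension actually builds its pages.

```python
from typing import Dict, Sequence

# Parameter order copied from the Cora Lexicon Data call above.
LEXICON_FIELDS = [
    "Title", "Subtitle", "Place of Publication", "Editor", "Coordinator",
    "Author", "Publisher", "Language", "Edition", "URN",
    "Year of Publication", "IDBBF", "Has Volume",
]


def render_template_call(template: str, fields: Sequence[str],
                         values: Dict[str, str]) -> str:
    """Render a wikitext template call with one '| Name = value' line per field."""
    unknown = set(values) - set(fields)
    if unknown:
        raise ValueError(f"unknown parameters: {sorted(unknown)}")
    lines = ["{{" + template]
    for name in fields:
        # A missing value yields an empty parameter, as in the blank format above.
        lines.append(f"| {name} = {values.get(name, '')}".rstrip())
    lines.append("}}")
    return "\n".join(lines)


output = render_template_call("Cora Lexicon Data", LEXICON_FIELDS,
                              {"Title": "Example Lexicon", "Language": "de"})
print(output)
```

Always emitting every parameter, even an empty one, keeps generated pages uniform with pages created from the blank format.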

The Cora Volume Data template (Template:Cora_Volume_Data) is called in the following format:

    {{Cora Volume Data
    | Title =
    | Subtitle =
    | Volume Number =
    | Place of Publication =
    | Publisher =
    | Editor =
    | Language =
    | URN =
    | Year of Publication =
    | IDBBF =
    | Physical Description =
    | Part of Lexicon =
    | Numbering Offset =
    | Type of Numbering =
    }}
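The lexicon and volume templates record the same relationship from both ends. A lexicon lists its volumes in Has Volume, and each volume names its lexicon in Part of Lexicon. The minimal sketch below reports where the two directions disagree. It assumes that both parameters hold page titles and that Has Volume contains a comma-separated list; the separator is an assumption, not something this page specifies. The function name `check_volume_links` is hypothetical.

```python
from typing import Dict, List, Tuple


def check_volume_links(has_volume: Dict[str, str],
                       part_of_lexicon: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (lexicon, volume) pairs that are recorded in only one direction.

    has_volume maps a lexicon page to its 'Has Volume' value (comma-separated,
    an assumption); part_of_lexicon maps a volume page to its 'Part of Lexicon'.
    """
    forward = {
        (lexicon, volume.strip())
        for lexicon, volumes in has_volume.items()
        for volume in volumes.split(",") if volume.strip()
    }
    backward = {(lexicon, volume) for volume, lexicon in part_of_lexicon.items()}
    # The symmetric difference contains exactly the links missing on one side.
    return sorted(forward ^ backward)


problems = check_volume_links(
    {"Lexicon A": "A Vol 1, A Vol 2"},
    {"A Vol 1": "Lexicon A", "A Vol 3": "Lexicon A"},
)
print(problems)
```

Here "A Vol 2" is listed by the lexicon but does not point back to it, and "A Vol 3" points to the lexicon without being listed. Both pairs are therefore reported.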

The Cora Lemmata Data template (Template:Cora_Lemmata_Data) is called in the following format:

    {{Cora Lemmata Data
    | Title =
    | Subtitle =
    | Part of Lexicon =
    | Part of Volume =
    | Language =
    | URN =
    | IDBBF =
    | Original Person =
    | First Page =
    | Last Page =
    | Has Digital Image =
    | Category =
    }}
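When checking existing pages, it helps to go the other way and read a call like the one above back into a dictionary. The minimal sketch below handles only the flat, one-parameter-per-line layout used by these templates. It is not a general wikitext parser: nested templates, links that contain `|`, or multi-line values would need a dedicated wikitext parser. The function name `parse_template_call` is hypothetical.

```python
import re
from typing import Dict, Tuple

# Matches lines of the form "| Name = value"; the value may be empty.
PARAM_RE = re.compile(r"^\|\s*([^=]+?)\s*=\s*(.*?)\s*$")


def parse_template_call(text: str) -> Tuple[str, Dict[str, str]]:
    """Return the template name and its parameters from a flat template call."""
    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    if len(lines) < 2 or not lines[0].startswith("{{") or lines[-1] != "}}":
        raise ValueError("expected a call starting with '{{' and ending with '}}'")
    name = lines[0][2:].strip()
    params: Dict[str, str] = {}
    for line in lines[1:-1]:
        match = PARAM_RE.match(line)
        if match is None:
            raise ValueError(f"unexpected line: {line!r}")
        params[match.group(1)] = match.group(2)
    return name, params


call = """{{Cora Lemmata Data
| Title = Schule
| First Page = 13
| Last Page = 15
| Category =
}}"""
name, params = parse_template_call(call)
print(name, params)
```

All values come back as strings. Page numbers, for example, still need converting before any numeric comparison.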

The Cora Image Data template (Template:Cora_Image_Data) is called in the following format:

    {{Cora Image Data
    | Original URI =
    | Rights Holder =
    | URN =
    | Page Number =
    | Page Numbering =
    | Part of Article =
    | Part of TitlePage =
    | Part of TOC =
    | Part of Preface =
    }}
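The lemma and image templates overlap. An article spans First Page to Last Page, and each image records its Page Number and the article it belongs to (Part of Article). The minimal consistency check below flags images whose page lies outside their article's range. It assumes that both page values are plain integers and that Part of Article holds the lemma's page title. Lexica that use other page numbering schemes (cf. Type of Numbering and Page Numbering) would need a conversion step first. The function name and sample URNs are hypothetical.

```python
from typing import Dict, List, Tuple


def images_outside_article(
    lemmata: Dict[str, Tuple[int, int]],
    images: List[Tuple[str, int, str]],
) -> List[str]:
    """Return URNs of images whose page lies outside their article's page range.

    lemmata maps a lemma title to (first_page, last_page);
    images are (urn, page_number, part_of_article) tuples.
    Images that point to an unknown lemma are reported as well.
    """
    flagged = []
    for urn, page, article in images:
        page_range = lemmata.get(article)
        if page_range is None or not page_range[0] <= page <= page_range[1]:
            flagged.append(urn)
    return flagged


lemmata = {"Schule": (13, 15)}
images = [
    ("urn:example:0013", 13, "Schule"),
    ("urn:example:0016", 16, "Schule"),      # one page past Last Page
    ("urn:example:0020", 20, "Unterricht"),  # lemma missing from the lookup
]
print(images_outside_article(lemmata, images))
```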

Mapping with Semantic Web Vocabularies

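By default, SMW supports importing Semantic Web vocabularies (https://semantic-mediawiki.org/wiki/Help:Import_vocabulary). To describe the four entities of the lexicon (lexicon, volume, lemma, image), as well as further main entities such as persons, corporate bodies, and concepts, we used the following vocabularies:

* BIBO (MediaWiki:Smw_import_bibo)
* GND (MediaWiki:Smw_import_gnd)
* REL (MediaWiki:Smw_import_rel)
* SKOS (MediaWiki:Smw_import_skos)
* FOAF (MediaWiki:Smw_import_foaf)

Each of these pages lists in detail the properties of the respective vocabulary that are imported and used in the VFU-ELF.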
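In SMW, a wiki property is linked to a vocabulary term by adding an `[[Imported from::prefix:term]]` annotation to the property page. The prefix refers to a `MediaWiki:Smw_import_<prefix>` page such as MediaWiki:Smw_import_bibo. The sketch below generates these annotations for a few template fields. The field-to-term mapping is a hypothetical illustration. `bibo:pageStart`, `bibo:pageEnd`, `bibo:edition` and `bibo:volume` are real BIBO terms, but it is an assumption that the VFU-ELF maps these fields to them, and also that the wiki property names match the template parameter names. For the mapping actually in use, check the import pages.

```python
from typing import Dict

# Hypothetical mapping from wiki property names to BIBO terms; the terms exist
# in BIBO, but this is not necessarily the mapping used in the VFU-ELF.
PROPERTY_TO_TERM = {
    "First Page": "bibo:pageStart",
    "Last Page": "bibo:pageEnd",
    "Edition": "bibo:edition",
    "Volume Number": "bibo:volume",
}


def imported_from_annotations(mapping: Dict[str, str]) -> Dict[str, str]:
    """Return property page title -> 'Imported from' annotation for that page."""
    annotations = {}
    for prop, term in mapping.items():
        prefix, sep, local = term.partition(":")
        if not sep or not prefix or not local:
            raise ValueError(f"expected 'prefix:term', got {term!r}")
        annotations[f"Property:{prop}"] = f"[[Imported from::{prefix}:{local}]]"
    return annotations


for page, text in imported_from_annotations(PROPERTY_TO_TERM).items():
    print(page, text)
```

The term must also be listed on the corresponding import page, because SMW takes the property's datatype from that listing.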

Mainpage

The main page offers central information about the current projects and the various research activities (recent changes, changed lexica, projects, help needed, timeline of lexica, etc.). A default two-column main page is available at Main_Page_Default. See also the design templates Template:Box1 and Template:Box2 and the adjusted skin (Cora_Skin_implementation).

[Figure: Mainpage of VRE ELF for Educational Lexica Research]